This post is part of an ongoing blog series where Scaled Agile Partners share stories from the field about using Measure and Grow assessments with customers to evaluate progress and identify improvement opportunities.

As business environments feature increasing rates of change and uncertainty, agile ways of working are becoming the dominant way of operating around the globe. The reason for this dominance is not that agile is necessarily the “best” way of working (agile, by definition, embraces the idea that you don’t know what you don’t know) but because businesses have found agile better-suited to addressing today’s challenges. Detailed three-year plans, extensive Gantt charts, and work breakdown structures simply have less relevance in today’s world. Agile, with its emphasis on fast learning and experimentation, has proven itself to be more appropriate for today’s unpredictable business environment.

Agility Requires Data You Can Trust

Whereas a plan-driven approach requires an extensive analysis phase, today’s context demands frequent access to high-quality data and information to facilitate quick course correction and validation. One of these critical sources of data is targeted assessments. The purpose of any assessment is to gather information. And the quality of the information collected is a direct result of the quality of the assessment.

Think of an assessment as a measuring tool. If we were studying a physical object, we might use measuring devices to assess its length, height, mass, and so on. Scientists have developed sophisticated definitions of many of these physical characteristics so we can have a shared understanding of them.

However, people, and especially groups of people, are not quite so straightforward to measure, particularly when it comes to their attitudes and feelings. It’s not really possible to directly measure concepts like culture and teamwork in the same way we can measure mass or length. Instead, we have to look to psychometrics, the field of study dedicated to the construction and validation of assessment instruments, to help us measure these complex topics.

Survey researchers often refer to an assessment or questionnaire as an “instrument,” because the purpose is to measure. We measure to learn, and we learn to apply our knowledge in pursuit of improvement. This is one reason why assessment is such an integral part of the educational system. Properly designed, assessments can be a powerful tool to help us validate our approach, understand our strengths, and identify areas of opportunity.

Ensuring Quality is Built into the Assessment

Since meaningful information is so critical to fast inspection and adaptation, it’s important to use high-quality assessments. After all, if we’re going to leverage insights from the assessments to inform our strategy and guide our decisions, we need to be confident we can trust the data.

How do we know that an assessment instrument is measuring what it purports to? It’s important to take care when designing the assessment tool, and then to use data to provide evidence of both its validity (accuracy) and its reliability (precision). Here’s how we ensure quality is built into our assessment.

Step 1: Prototype

All survey instrument development starts with a measurement framework. When Comparative Agility partnered with SAFe® to design the new Business Agility assessment, subject matter experts leveraged their experience from the original Business Agility survey to explore enhancements.

The original Business Agility survey had generated a variety of important insights and proved to be incredibly popular among SAFe customers. But one area of potential improvement was the language used in the assessment itself. Customers wanted to leverage a proven SAFe survey to understand an organization’s current state, without first requiring the organization to have gone through comprehensive training. With the former Business Agility survey, this proved difficult, since the survey instrument often referred to SAFe-specific topics that many had not been exposed to yet.

To address this issue, subject matter experts (SPCTs, SAFe Fellows) teamed up with data scientists from Comparative Agility to craft SAFe survey items that would be meaningful at the start of a SAFe implementation, while avoiding terms that would require prior knowledge. This work resulted in a prototype survey or “minimum viable product.”

Step 2: Test and Validate

Once the new Business Agility survey instrument was developed, we released it to beta and began to collect data. Several people in the SPCT community were asked to participate in a pilot. In follow-up interviews, respondents were asked about their experience with the survey. Together with respondents, the survey design team, and additional subject matter experts, we examined the results. (We also received external feedback from a Gartner researcher to help improve the nomenclature of some of the survey items.) Only once the team is satisfied with the reliability and validity of the beta survey instrument will it be ready for production.
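The post does not say which statistics the team used to judge reliability. As an illustration only, one widely used internal-consistency measure in survey research is Cronbach's alpha, which compares the variance of individual items with the variance of respondents' total scores. The sketch below assumes a small, made-up set of pilot responses; the function name and the data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Estimate Cronbach's alpha for a set of survey responses.

    responses: one row per respondent, each row a list of numeric
    item scores (every row must have the same number of items).
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least 2 items and 2 respondents")

    # Sample variance of each item (column) across respondents.
    item_variance_sum = sum(variance(column) for column in zip(*responses))

    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")

    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical pilot data: 5 respondents answering 3 items on a 1-5 scale.
pilot = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 5, 5],
    [3, 3, 2],
    [1, 2, 1],
]

alpha = cronbach_alpha(pilot)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Values closer to 1 suggest the items move together and are plausibly measuring the same underlying concept. A statistic like this speaks only to reliability; validity still needs the kind of expert review and respondent interviews described above.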

Step 3: Deploy and Monitor

Even after the Business Agility survey instrument reaches the production phase, the data science team at Comparative Agility and Scaled Agile continuously monitor the assessment for data consistency. A rigorous change management process ensures that any post-deployment tweaks to the survey language are tested so they don’t negatively affect accuracy.

Integrating Flow and Outcomes

Although validated assessments are a critical component of a data-driven approach to continuous improvement, they’re not sufficient. To gain a holistic perspective and complete the feedback loop, it’s also important to measure Flow and Outcomes.

Flow

Flow metrics express how efficient an organization is at delivering value. When operating in complex environments characterized by uncertainty and volatility, flow metrics help organizations assess performance across the end-to-end value stream and identify impediments to agility. A more comprehensive overview of Flow metrics can be found in the SAFe knowledge article, Metrics.
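To make this concrete, here is a minimal sketch of two measures commonly grouped under flow metrics: flow time (how long an item takes from start to finish) and flow efficiency (the share of that time spent on active work). The definitions are simplified, and the work items, dates, and function names are hypothetical.

```python
from datetime import date

# Hypothetical work items: (started, finished, days of active work).
work_items = [
    (date(2024, 1, 2), date(2024, 1, 12), 4),
    (date(2024, 1, 5), date(2024, 1, 10), 3),
    (date(2024, 1, 8), date(2024, 1, 28), 5),
]


def flow_time_days(started, finished):
    """Elapsed calendar days between starting and finishing an item."""
    return (finished - started).days


def average_flow_time(items):
    """Mean elapsed days per item."""
    return sum(flow_time_days(s, f) for s, f, _ in items) / len(items)


def flow_efficiency(items):
    """Active work days divided by total elapsed days, across all items."""
    total_active = sum(active for _, _, active in items)
    total_elapsed = sum(flow_time_days(s, f) for s, f, _ in items)
    return total_active / total_elapsed


print(f"Average flow time: {average_flow_time(work_items):.1f} days")
print(f"Flow efficiency: {flow_efficiency(work_items):.0%}")
```

A low flow efficiency means items spend most of their elapsed time waiting rather than being worked on, which is often where impediments hide.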

Outcomes

Flow metrics may help us deliver quickly and effectively, but without understanding whether we’re delivering value to our customers, we risk simply “delivering crap faster.” Outcome metrics address this challenge by ensuring that we’re creating meaningful value for the end-customer and delivering business benefits. Examples of outcome metrics include revenue impact, customer retention, Net Promoter Score (NPS), and Mean Time to Resolution (MTTR).
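As a small worked example of one outcome metric, Net Promoter Score is conventionally calculated from 0-10 "how likely are you to recommend us" ratings: the percentage of promoters (9 or 10) minus the percentage of detractors (0 through 6). The ratings below are made up for illustration, and the function name is hypothetical.

```python
def net_promoter_score(ratings):
    """Return NPS on a -100..100 scale from a list of 0-10 ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")

    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 through 6

    # Passives (7 or 8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical survey responses.
survey_ratings = [10, 9, 8, 7, 6, 10, 3, 9, 8, 10]

print(f"NPS: {net_promoter_score(survey_ratings):.0f}")
```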

Embracing a Culture of Data-Driven, Continuous Improvement

It’s important to note that data and insights help inform our strategy and guide our decisions, but to make change stick and ultimately drive sustainable cultural change, we need to appreciate that data is a means to an end.

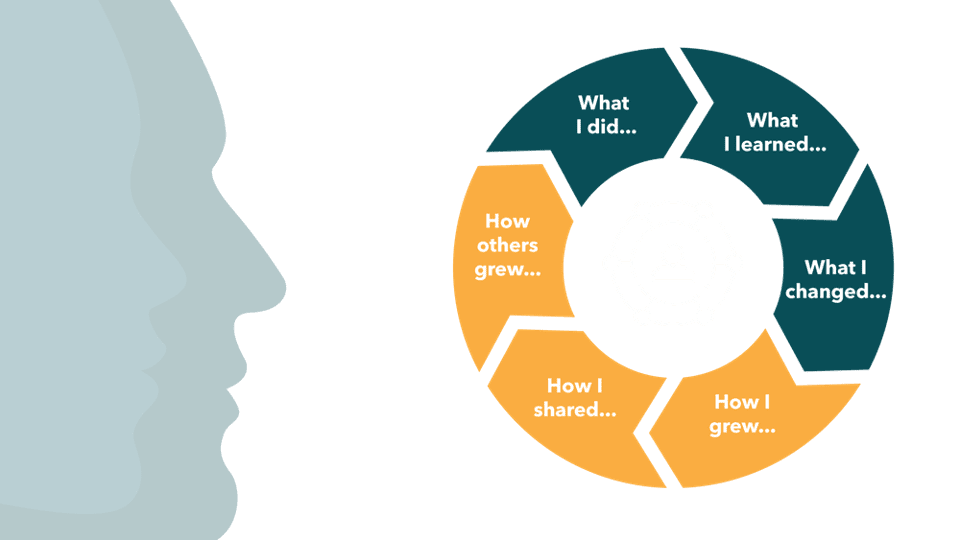

That is, data—even though it’s validated, statistically significant, and of high quality—should be viewed not as a source of answers, but rather as a means to ask better questions and uncover new insights in our interactions with people. By having data guide us in our conversations, interactions, and how we define hypotheses, we can drive a culture of inquiry and continuous improvement.

Just as a survey helps us better understand how we feel, the assessment gives us an opportunity to interact in a more meaningful way and increase our understanding. The data itself is not the goal but a way to help us learn faster, adapt quicker, and remove impediments to agility.

Start Improving with Your Own Data

As 17 software industry professionals noted some twenty years ago at a resort in Snowbird, Utah, becoming more agile is about “individuals and interactions over processes and tools.”

To start your own journey of data-driven, continuous improvement today, activate your free Comparative Agility account in the Measure & Grow area of the SAFe Community Platform.

About Matthew

Matthew Haubrich is the Director of Data Science at Comparative Agility. Passionate about discovering the story behind the data, Matt has more than 25 years of experience in data analytics, survey research, and assessment design. Matt is a frequent speaker at numerous national and international conferences and brings a broad perspective of analytics from both public and private sectors.