The title of my post may read like acronym soup but all of these concepts play a critical role in SAFe, and understanding how they’re connected is important for successful SAFe implementation. After exploring some connections, I will suggest some actions you can take while designing, evaluating, or accelerating your implementation.

KPIs and OKRs

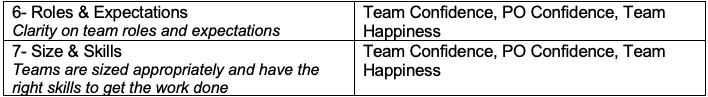

The SAFe Value Stream KPIs article describes Key Performance Indicators (KPIs) as “the quantifiable measures used to evaluate how a value stream is performing against its forecasted business outcomes.”

That includes:

- Health of day-to-day performance

- Work to create sustainable change in performance

Objectives and Key Results (OKRs) are meant to be about driving and evaluating change rather than maintaining the status quo. Therefore, they are a special kind of KPI. Objectives point towards the desired state. Key results measure progress towards that desired state.

But how do these different concepts map to SAFe’s Operational Value Streams (OVSs) and Development Value Streams (DVSs)? And why should you care?

Changing and Improving the Operation

Like Strategic Themes, most OKRs point to the desired change in business performance. These OKRs would be the ones that company leadership cares about. And they would be advanced through the efforts of a DVS (or multiple ones).

For example, if the business wants to move to a subscription/SaaS model, that’s a change in the operating model—a change in how the OVS looks and operates. That change is supported by the development of new systems and capabilities, which is work that will be accomplished by a DVS (or multiple ones).

This view enables us to recognize the wider application of the DVS concept that we talk about in SAFe 5. Business agility means using Agile and SAFe constructs to develop any sort of changing the business needs, regardless of whether that change includes IT or technology.

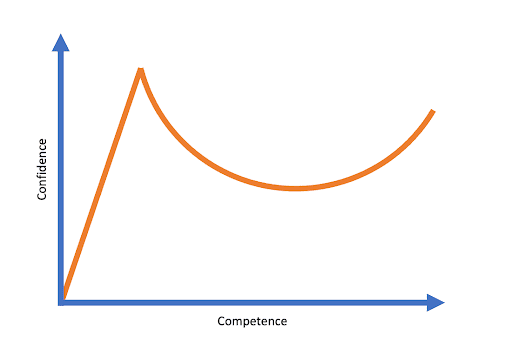

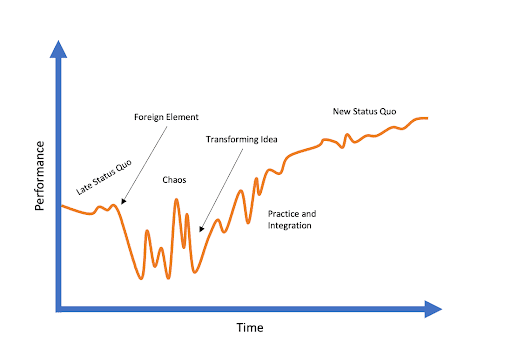

Whenever we are trying to change our operation, there’s a question about how much variability we’re expecting around this change. Is there more known than the unknown? Or vice versa? Are we making this change in an environment of volatility, uncertainty, complexity, and ambiguity? If yes, then using a DVS construct that employs empiricism to seek the right answers to how to achieve the OKR is essential, regardless of how much IT or technology is involved. We might have an OKR that requires business change involving mainly legal, marketing, procurement, HR, and so on, that would still benefit from an Agile and SAFe DVS approach.

These OKRs would then find themselves elaborated and advanced through the backlogs and backlog items in the various ARTs and teams involved in this OKR.

In some cases, an OKR would drive the creation of a focused DVS. This is the culmination of the Organize around Value Lean-Agile SAFe Principle. This is why Strategic Themes and OKRs should be an important consideration when trying to identify value streams and ARTs (in the Value Stream and ART identification workshop). And a significant new theme/OKR should trigger some rethinking of whether the current DVS network is optimally organized to support the new value creation goals set by the organization.

Maintaining the Health of the Operation

As mentioned earlier, maintaining the health of the operation is also tracked through KPIs. Here we expect stability and predictability in performance. It’s crucial work but it’s not what OKRs or Strategic Themes are about.

This work can be simple, complex, or even chaotic depending on the domain. The desire of any organization is to bring its operation under as much control as possible and minimize variability as it makes sense in the business domain. What this means is that in many cases, we don’t need Agile and empiricism in order to actually run the operation. Lean and flow techniques can still be useful to create sustainable, healthy flow (see more in the Organizational Agility competency).

Whenever people working in the OVS switch to improving the OVS (or in other words working on versus in the operation), they are, in essence, moving over implicitly to a DVS.

Some organizations make this duality explicit by creating a DVS that involves a combination of people who spend some of their time in the OVS and some of their time working on it together with people who are focused on working on the OVS. For example, an orthopedic clinic network in New England created a DVS comprising clinicians, doctors, PAs, and billing managers (that work the majority of their time in the OVS) together with IT professionals. Major improvements to the OVS happen in this DVS.

Improving the Development Value Stream

The DVS needs to relentlessly improve and learn as well. Examples of OKRs in this space could be: improving time-to-market, as measured by improved flow time or by improving the predictability of business value delivered, as measured by improved flow predictability. It could also be: organize around value, measured by the number of dependencies and the reduction in the number of Solution Trains required.

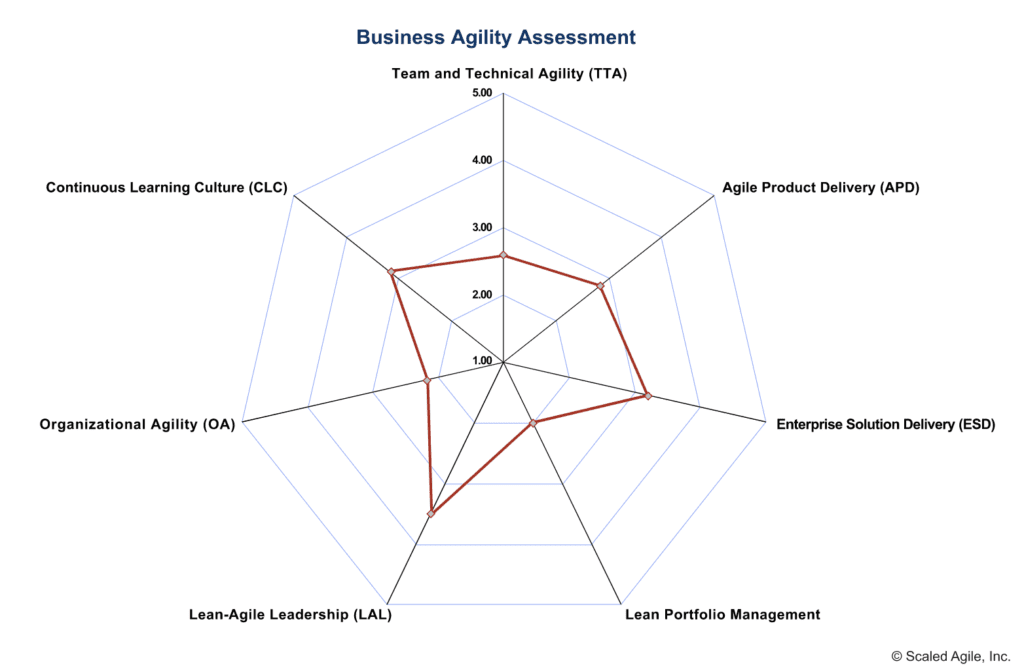

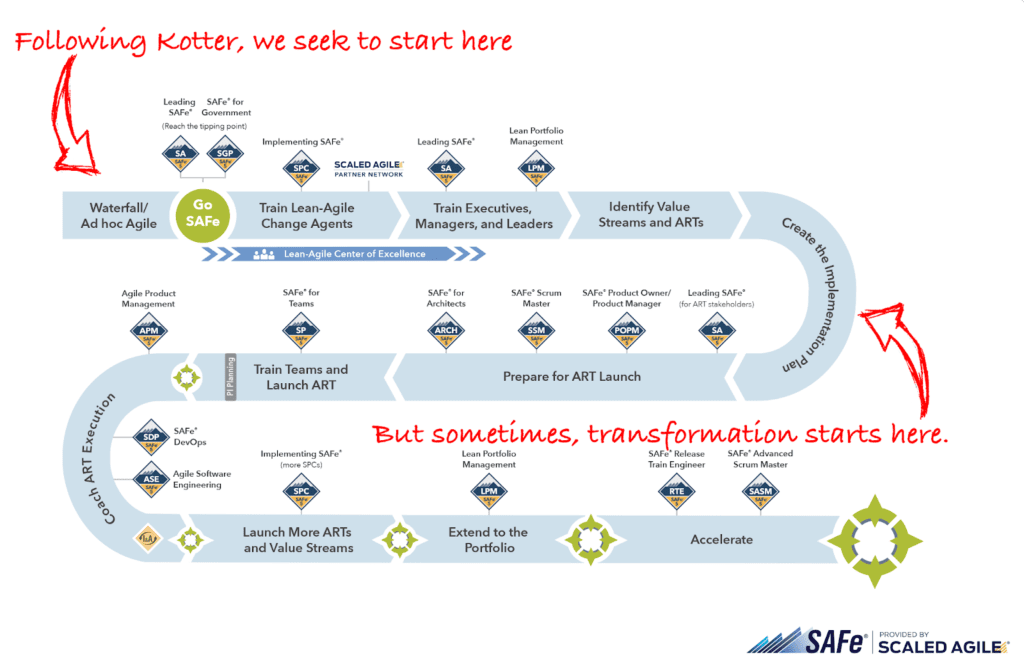

This is also where the SAFe transformation or Agile journey lives. There are ways to improve DVSs or the overall network of DVSs, creating a much-improved business capability to enhance its operation and advance business OKRs.

Implementing OKRs in this space relates more to enablers in the SAFe backlogs than to features or capabilities. Again, these OKRs change the way the DVS works.

Running the Development Value Stream

Similar metrics can be used as KPIs that help maintain the health of the DVS on an ongoing basis. For example, if technical debt is currently under control, a KPI monitoring it might suffice and hopefully will help avoid a major technical debt crisis. If we weren’t diligent enough to avoid the crisis, an objective could be put in place to significantly reduce the amount of technical debt. Achieving a certain threshold for a tech debt KPI could serve as a key result (KR) for this objective. Once it’s achieved, we might leave the tech debt KPI in place to maintain health.

It’s like continuing to monitor your weight after you’ve gone on a serious diet. During the diet, you have an objective of achieving a healthy weight with a KR tracking BMI and aiming to get below 25. After achieving your objective, you continue to track your BMI as a KPI.

Taking Action to Advance Your Implementation Using OKRs

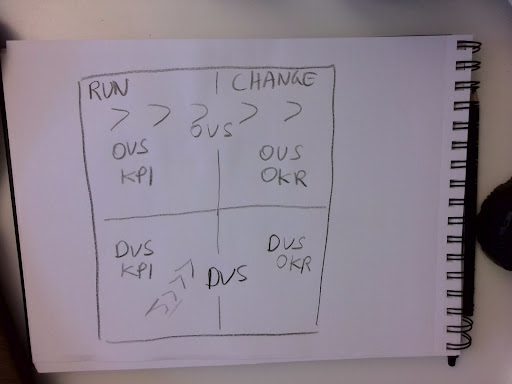

In this blog post, we explored the relationship between operational and development value streams and the Strategic Themes and OKRs. We’ve seen OVS KPIs and OKRs as well as DVS OKRs and KPIs.

A key step in accelerating business agility is to continually assess whether you’re optimally organized around value. OKRs can provide a very useful lens to use for this assessment.

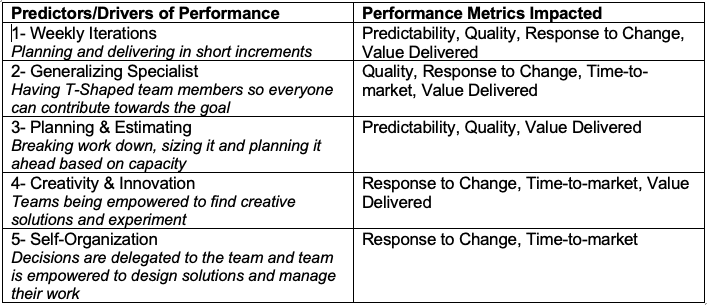

Start by reviewing your OKRs and KPIs and categorize them according to OVS/DVS/Change/Run.

You can use the matrix below.

If you find some OKRs on the left side of the matrix, it’s time to rethink.

Run-focused OKRs should actually be described as KPIs. Discuss the difference and whether you’re actually looking for meaningful change to these KPIs (in which case it really can be an OKR—but make sure it is well described as one) or are happy to just maintain a healthy status quo.

You can then consider your DVS network/ART/team topology. Is it sufficiently aligned with your OKRs/KPIs? Are there interesting opportunities to reorganize around value?

This process can also be used in a Value Stream Identification workshop for the initial design of the implementation or whenever you want to inspect and adapt it.

Find me on LinkedIn to learn more about making these connections in your SAFe context via an OKR workshop.

About Yuval Yeret

Yuval is a SAFe Fellow and the head of AgileSparks (a Scaled Agile Partner) in the United States where he leads enterprise-level Agile implementations. He’s also the steward of The AgileSparks Way and the firm’s SAFe, Flow/Kanban, and Agile Marketing. Find Yuval on LinkedIn.