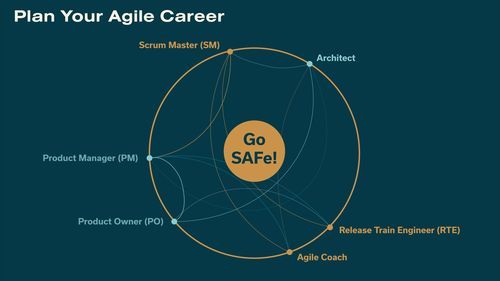

For more than two decades, I have worked with large system builders in Aerospace, Defense, Automotive, and many other industries, supporting leaders in applying Lean-Agile principles to their engineering practices. As a methodologist and a SAFe® Fellow, I speak with organizations daily about using SAFe to build and deliver large, complex systems faster, more predictably, and with higher quality.

Hardware ≠ Software: Understanding the Evolution of Hardware Development

Hardware and Lean-Agile don’t immediately seem like a perfect fit. Building a hardware system involves a lot of risk and cost, and a huge amount of infrastructure is required to design, verify, validate, and ultimately certify hardware solutions.

The common approach to hardware development has been to define complete requirements and design specifications prior to implementation. There hasn’t been a mindset of building incrementally or doing tasks in small batches, mainly because of the downstream risk and the costs associated with getting it wrong. Yet the systems we build today have too much market, user, and technical uncertainty to assume we can build them right the first time.

Over the past decade, we’ve witnessed a real disruption in hardware. New technology has enabled the industry to embrace a more Agile way of working. Digital technology, including digital twins, allows organizations to build an entire digital model of a system to address uncertainty faster and more cost-effectively. While hardware teams may not be able to build a new part every iteration, rapid prototyping and 3D printing mean they can now quickly and cheaply test and iterate at increasing levels of fidelity.

At Scaled Agile, we saw a clear market need for a course that would help engineering organizations adopt SAFe and realize the same tremendous value that many software organizations have already seen.

Supporting Organizations in Implementing Lean-Agile Principles in Hardware

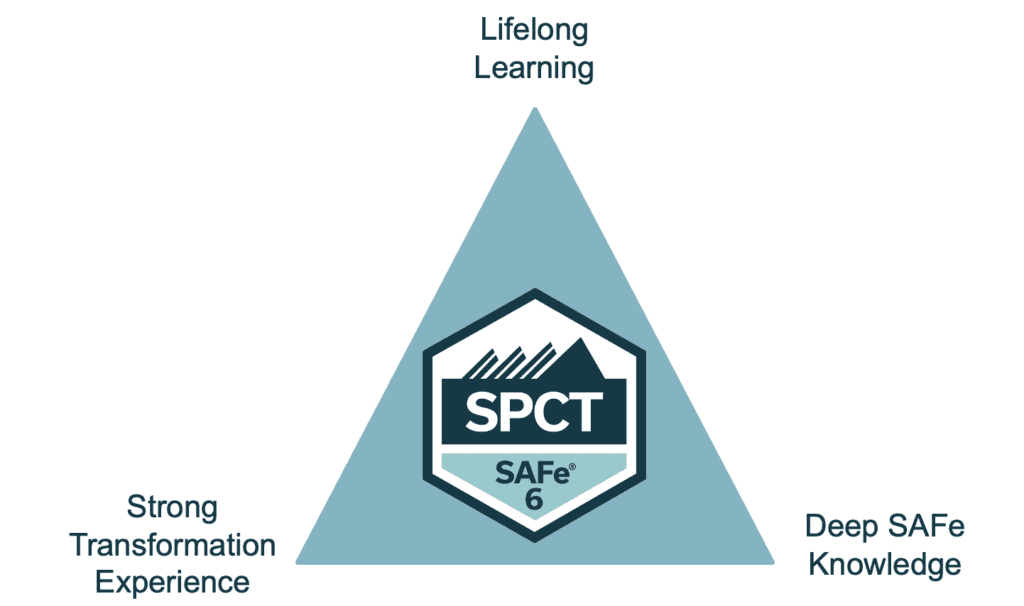

To create SAFe® for Hardware, we worked closely with our customers and the SPCT community, drawing on the conversations I've had over the past several years. The content in each module has been tested with multiple customers and reviewed by a community of SPCTs who work with hardware organizations. We settled on the modules that best help engineering leaders and program managers accelerate hardware development and delivery and get faster feedback and learning on the systems they build.

We start with a brief introduction to SAFe and Lean-Agile principles to level-set the class. Next, we dive into Designing for Change, where we look at how engineering leaders can design their systems to optimize for incremental development. Consider the mobile phone: before the smartphone era, mobile phones ran fixed logic, while today a phone is a platform that can be continually improved. Too often, systems are designed to optimize for initial cost over the ability to change, which limits how they can evolve over time based on feedback and learning.

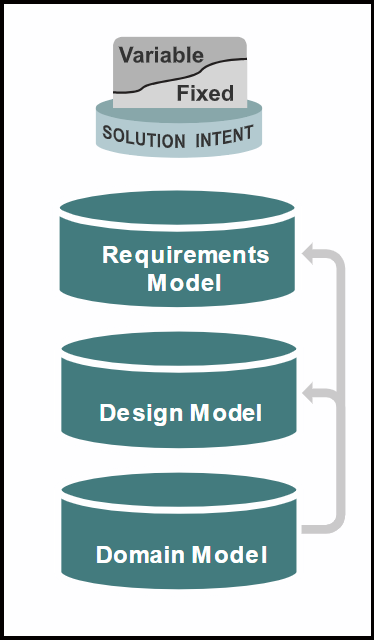

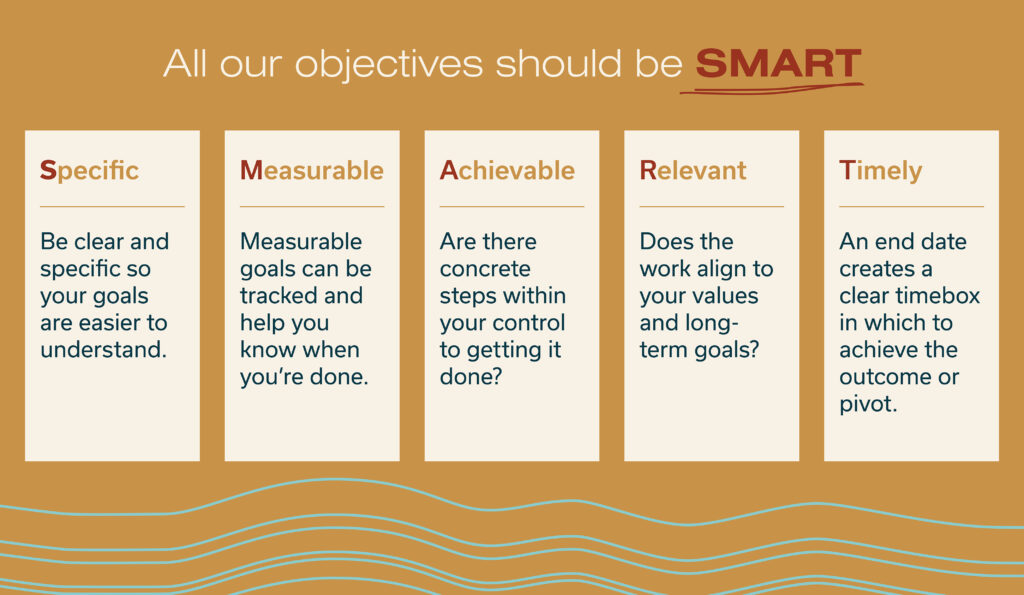

Our next obstacle is the specification process. In Specifying the Solution Incrementally, we show how smaller batches of requirements and design create feedback loops. This requires balancing and connecting traditional formal (‘shall’) specifications with backlog items like features and enablers. We also show how to incorporate nonfunctional requirements (NFRs) into the Agile way of working.

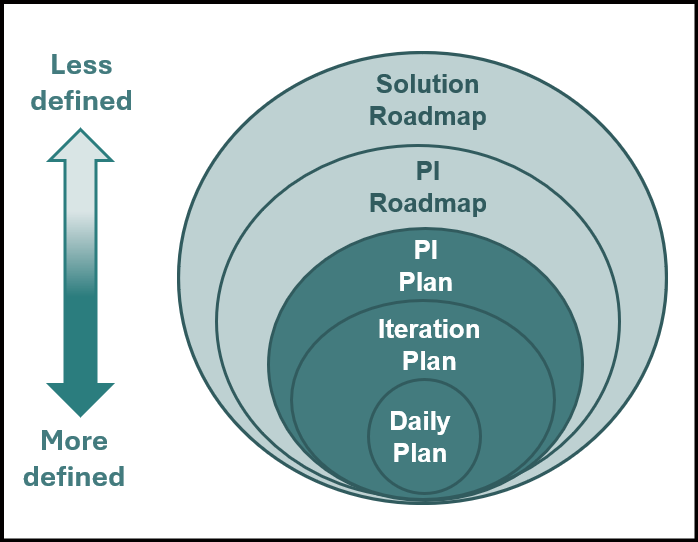

A crucial difference between software and hardware development is the dramatically longer lead time needed to manufacture physical parts. Further, hardware systems are often large, with multi-year and sometimes even multi-decade lifespans. Multiple Horizons of Planning shares best practices for planning with both a long-term and a near-term perspective. We show how organizations can balance the need for long-term forecasting with giving teams the ability to plan and commit to shorter-term work.

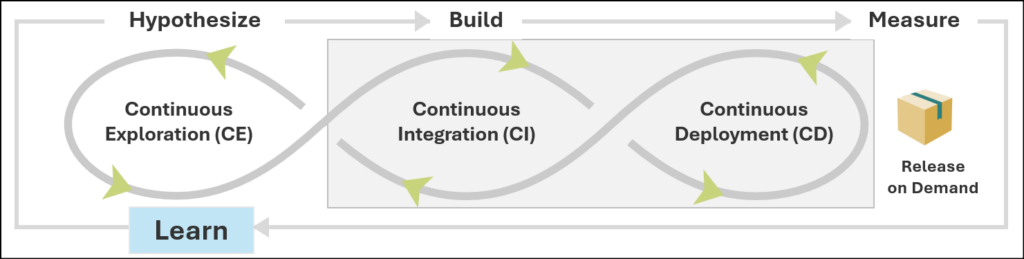

As we say in SAFe, objective evaluation of working systems is the true measure of progress. Other measures, including phase-gate milestones, too often lead to false-positive feasibility and wishful thinking. Being Agile in hardware requires a critical mindset shift. One issue is that teams often work in isolation on their part of a system and wait until the end to integrate it, hoping everything works. When it doesn't, rework can be costly and frustrating. In Frequently Integrating the End-to-End Solution, participants learn how the digital technologies described earlier enable more frequent integration and faster, more cost-effective learning.

Another shift is in the way that engineering leaders look at compliance and regulation. Instead of creating a large bow wave of compliance activities at the end of development, we shift those activities left, building security, compliance, and physical safety into a feedback loop each increment. We discuss how to make this happen during the module Continually Addressing Compliance Concerns.

Of course, no engineering team is an island. All organizations recognize the need to leverage suppliers whose knowledge and expertise are required to co-develop the solution. As organizations change to Lean-Agile ways of working, they must bring their suppliers along with them to be successful. In Collaborating with Suppliers, engineering leaders will learn how to integrate suppliers into their SAFe practices. They will also understand common contracting challenges and ways to address them.

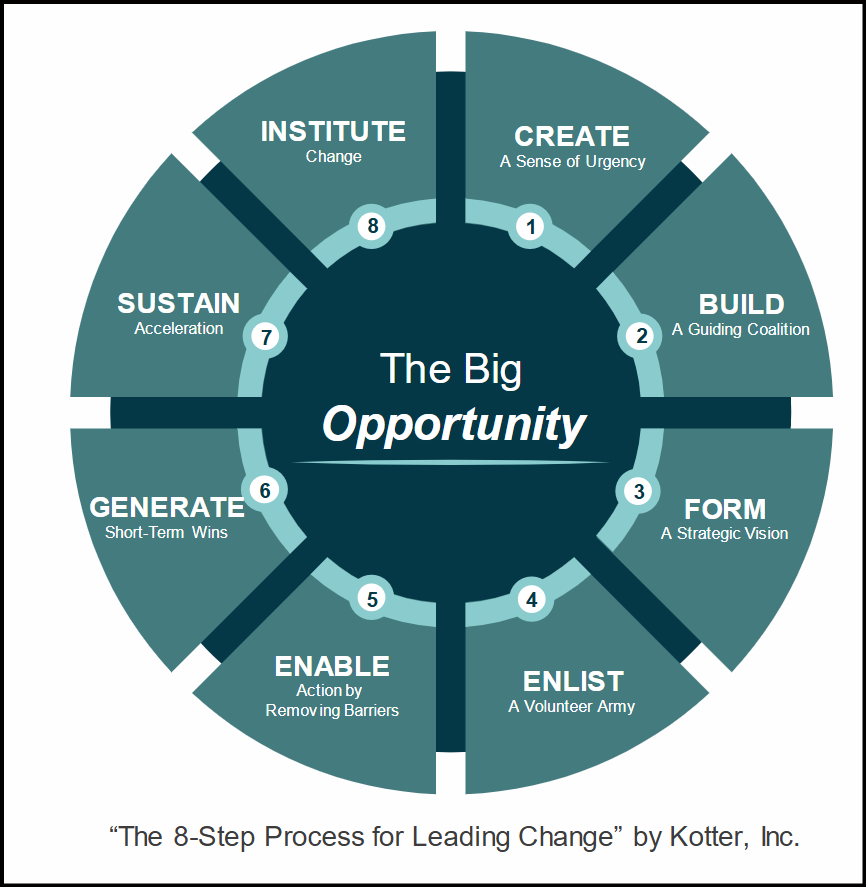

Perhaps most crucially, our module Leading the Change is a call to action for development managers, program managers, and engineering executives. It's not enough to agree to a new way of working; leaders need to own it and lead that change.

Built to Reinforce Learning

While the focus of SAFe® for Hardware is on knowledge transfer and education, we purposely designed the course to be 40 percent collaborative, with many hands-on, activity-based sessions where engineering practitioners can learn from one another. Each module provides opportunities for leaders to discuss their current challenges and consider new approaches.

In our testing, I've seen leaders arrive with very different perspectives and then, once they're in the room together, discuss Agile practices with excitement and passion. One attendee told me he planned to apply the roadmapping workshop the following week. And seeing the energy in the room during the marble-run exercise is just the icing on the cake! We've had feedback that the content in SAFe for Hardware has really helped engineering leaders start thinking differently and understand what's possible in hardware development.

For organizations to change their ways of working, leaders need to see the art of the possible and understand that applying Lean-Agile principles and SAFe in hardware is doable. They also need to know that others are already doing it and seeing success. I want people to walk out of the course confident that, if they take responsibility as leaders for applying the mindset and the principles to their specific context and organization, they can find incredible value.

Visit the SAFe® for Hardware course page for more, or search for upcoming classes via the training calendar.

SAFe for Hardware is currently in Limited Release, with classes available from select partners. A full release is planned for February 2025.

About Harry Koehnemann

SAFe Methodologist and SAFe Fellow at Scaled Agile, Inc., Harry Koehnemann has worked for over two decades with large system builders in Aerospace, Defense, Automotive, and many other industries, supporting leaders in applying Lean-Agile principles to their engineering practices. Harry speaks with organizations daily about using SAFe to build and deliver large, complex systems faster, more predictably, and with higher quality.
